What is Supertonic TTS?

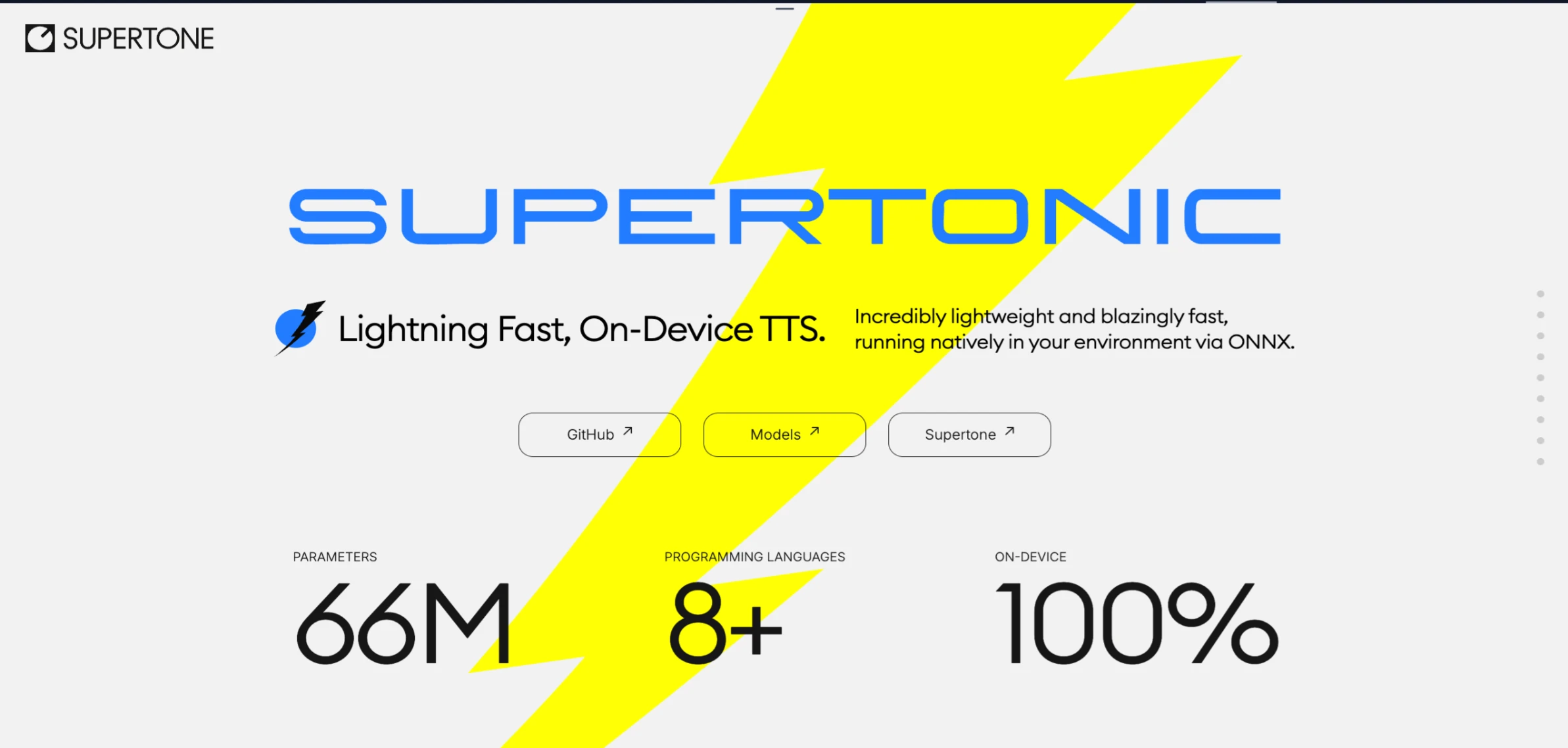

Supertonic TTS is a text-to-speech system built for speed and efficiency. It runs entirely on your device, meaning all processing happens locally without sending data to external servers. This approach provides complete privacy protection and eliminates network delays that can slow down cloud-based alternatives.

The system consists of three main components working together. A speech autoencoder creates continuous latent representations of audio. A text-to-latent module maps written text to these audio representations using flow-matching techniques. An utterance-level duration predictor helps control the timing and pacing of generated speech.

To keep the system lightweight, Supertonic TTS uses a low-dimensional latent space and compresses audio information temporally. The architecture employs ConvNeXt blocks, which provide efficient processing without excessive computational overhead. The entire model contains only 66 million parameters, making it practical for deployment on consumer hardware.

One notable feature is the system's ability to work directly with raw character-level text. It processes numbers, dates, currency symbols, abbreviations, and proper nouns without requiring pre-processing steps. This eliminates the need for grapheme-to-phoneme conversion modules and external alignment tools that add complexity to traditional text-to-speech pipelines.
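The Python sketch below shows what "raw character-level input" means in practice: one integer per character, with no phoneme conversion in between. Two details are assumptions for illustration only. The NFKC normalization and the use of Unicode code points as IDs may not match Supertonic, whose actual vocabulary and any light normalization it applies could differ.

```python
import unicodedata


def encode_characters(text: str) -> list[int]:
    """Map raw text to integer IDs, one per character (no G2P, no phonemes).

    Hypothetical scheme: NFKC-normalize, then use each character's Unicode
    code point as its ID. A real model would use its own trained vocabulary.
    """
    normalized = unicodedata.normalize("NFKC", text)
    return [ord(ch) for ch in normalized]


# Currency, abbreviations, and times go in exactly as written.
ids = encode_characters("Pay $1.5M by 3:45 PM")
print(len(ids), ids[:4])  # 20 [80, 97, 121, 32]
```

The model never has to be told that "$1.5M" is read as "one point five million dollars". It learns that mapping from character sequences during training.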

The system uses cross-attention mechanisms to align text with speech during generation. This alignment happens automatically during the synthesis process, removing dependencies on separate alignment models. The result is a simpler workflow that maintains high-quality output.

Overview of Supertonic TTS

| Feature | Description |
|---|---|
| System Type | On-Device Text-to-Speech |
| Model Size | 66 Million Parameters |
| Performance | Up to 167× faster than real-time |
| Deployment | Local Processing, No Cloud Required |
| Text Processing | Raw Character-Level Input |
| Runtime | ONNX Runtime |
| Research Paper | arXiv:2503.23108 |

Key Features of Supertonic TTS

High Speed Performance

On consumer hardware such as an Apple M4 Pro with WebGPU acceleration, Supertonic TTS generates speech up to 167 times faster than real time, so a one-second audio clip takes approximately 0.006 seconds to produce. It remains far faster than real time across text lengths, from short phrases to longer passages.

Lightweight Architecture

With only 66 million parameters, Supertonic TTS requires minimal storage and memory. This compact size makes it suitable for deployment on edge devices, mobile applications, and embedded systems where resources are limited. The model's efficiency comes from careful architectural choices that maintain quality while reducing computational requirements.

Complete Privacy Protection

All processing occurs locally on your device. Text input never leaves your machine, and no audio data is transmitted to external servers. This keeps sensitive information on hardware you control and can simplify compliance with data protection regulations. Users maintain full control over their content throughout the synthesis process.

Natural Text Handling

The system processes complex text expressions without pre-processing. It correctly interprets financial amounts like "$1.5M" or "€2,500.00", time expressions such as "3:45 PM" or "Mon, Jan 15", phone numbers with area codes, and technical units with decimal values. This capability reduces the need for text normalization steps that complicate other systems.

Configurable Parameters

Users can adjust inference steps to balance quality and speed. Fewer steps produce faster results, while more steps can improve audio quality. The system supports batch processing for handling multiple texts simultaneously, improving throughput for applications that need to generate many audio samples.
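Batch processing generally means running several inputs through the model in a single call. Texts differ in length, so a common approach is to pad them to a shared length and pass a mask that tells the model to ignore the padding. The Python sketch below shows that generic pattern. The `pad_id` of 0 and the 1/0 mask convention are assumptions, not Supertonic's documented input format.

```python
def pad_batch(
    sequences: list[list[int]], pad_id: int = 0
) -> tuple[list[list[int]], list[list[int]]]:
    """Right-pad variable-length ID sequences and build a mask (1 = real token, 0 = padding)."""
    if not sequences:
        return [], []
    max_len = max(len(seq) for seq in sequences)
    padded = [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]
    mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in sequences]
    return padded, mask


texts = ["Hi.", "Meeting at 3:45 PM"]
batch, mask = pad_batch([[ord(c) for c in t] for t in texts])
print(len(batch), len(batch[0]), sum(mask[0]))  # 2 18 3
```

Padding wastes some computation on short inputs, so grouping texts of similar length into the same batch usually improves throughput further.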

Multi-Platform Support

Supertonic TTS works across different environments including servers, web browsers, and edge devices. It supports multiple runtime backends including ONNX Runtime for CPU processing and WebGPU for browser-based acceleration. This flexibility allows developers to choose the deployment option that best fits their application requirements.


Performance Metrics

Supertonic TTS has been evaluated using two key metrics: characters per second and real-time factor. Together they show how quickly the system processes text and generates speech.

Characters per second measures throughput by dividing the number of input characters by the time needed to generate audio. Higher values indicate faster processing. Real-time factor (RTF) is the time taken to synthesize audio divided by the duration of the audio produced. A lower real-time factor means faster generation, and any value below 1 is faster than real time.

On an M4 Pro with CPU processing, Supertonic TTS achieves 912 to 1,263 characters per second depending on text length. With WebGPU acceleration, performance increases to 996 to 2,509 characters per second. On high-end GPUs like the RTX 4090, the system can process 2,615 to 12,164 characters per second.

Real-time factors range from 0.015 for CPU processing to 0.006 for WebGPU on the M4 Pro. In other words, generating one second of audio takes only 0.006 to 0.015 seconds. Because generation is local, that time is not followed by the network round-trip and remote server processing that a cloud-based service would add.
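Both metrics are simple ratios. The Python sketch below computes them and converts the reported RTFs into a speed-up over real time. Since 1 / 0.006 ≈ 167, this matches the "167× faster than real time" figure. The function names are illustrative and not part of any Supertonic API.

```python
def chars_per_second(num_chars: int, synthesis_seconds: float) -> float:
    """Throughput: input characters processed per second of wall-clock time."""
    return num_chars / synthesis_seconds


def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF: synthesis time divided by the duration of the audio produced."""
    return synthesis_seconds / audio_seconds


# Reported RTFs on the M4 Pro, converted to time per second of audio and speed-up.
for label, rtf in [("M4 Pro, CPU", 0.015), ("M4 Pro, WebGPU", 0.006)]:
    speedup = 1 / rtf  # how many times faster than real time
    print(f"{label}: {rtf * 1000:.0f} ms per second of audio, ~{speedup:.0f}x real time")
```

To benchmark your own setup, time a synthesis call with `time.perf_counter()` and pass the elapsed time, the input length, and the output audio duration to these two functions.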

System Architecture

Supertonic TTS uses a three-component architecture designed for efficiency. The speech autoencoder converts audio waveforms into continuous latent representations. This compression reduces the amount of data that needs to be processed while preserving important audio characteristics.

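The text-to-latent module uses flow matching to map text sequences to these latent audio representations. Flow matching trains a network to predict a velocity field that gradually transforms random noise into speech latents, conditioned on the input text. At inference time, the model integrates this field over a configurable number of steps. Because it targets the autoencoder's latents directly, it avoids the intermediate representations used in some other systems. This approach contributes to both speed and quality.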
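Flow-matching inference amounts to numerically integrating an ODE from noise (t = 0) to data (t = 1). The Python sketch below uses a fixed-step Euler solver, and the step count plays the same role as the inference-step setting: each step is one evaluation of the network. Several parts of this are assumptions for illustration and are not Supertonic's implementation:

- the Euler solver itself,
- the plain-list latents,
- the toy velocity function, which stands in for Supertonic's trained, text-conditioned network.

```python
import random


def euler_sample(velocity, x0, n_steps):
    """Integrate dx/dt = velocity(x, t) from t=0 (noise) to t=1 (data) with fixed Euler steps.

    Each step costs one call to `velocity`, i.e. one network evaluation in a real model.
    """
    x = list(x0)
    dt = 1.0 / n_steps
    for i in range(n_steps):
        t = i * dt  # t never reaches 1.0 inside the loop
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def make_toy_velocity(target):
    """Hypothetical stand-in for a trained network: the exact straight-line flow to `target`."""
    calls = {"count": 0}

    def velocity(x, t):
        calls["count"] += 1
        return [(ti - xi) / (1.0 - t) for ti, xi in zip(target, x)]

    return velocity, calls


target = [0.5, -1.0, 2.0]  # pretend "speech latent" for some text
random.seed(0)
noise = [random.gauss(0.0, 1.0) for _ in target]
for steps in (2, 8):
    velocity, calls = make_toy_velocity(target)
    out = euler_sample(velocity, noise, steps)
    print(steps, calls["count"], [round(v, 6) for v in out])
```

With this exact toy field, every step count lands on the target. A learned field is only approximate, so fewer steps means larger integration error. That is the quality/speed trade-off behind the inference-step setting.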

The utterance-level duration predictor estimates how long each generated utterance should be. This helps maintain natural pacing and rhythm in the synthesized speech. The predictor works at the utterance level rather than phoneme level, simplifying the overall architecture.
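A single utterance-level duration is enough to fix the length of the latent sequence the text-to-latent module must generate. Cross-attention then decides which characters each frame corresponds to. The minimal Python sketch below shows the conversion from duration to frame count. The sample rate and hop length are hypothetical values chosen for illustration, not Supertonic's actual configuration.

```python
import math


def latent_frames_for(duration_s: float, sample_rate: int, hop_length: int) -> int:
    """Length of the latent sequence to generate for one utterance.

    duration_s comes from the utterance-level duration predictor. sample_rate and
    hop_length (audio samples per latent frame) are hypothetical settings.
    """
    return math.ceil(duration_s * sample_rate / hop_length)


# Assumed values: 44.1 kHz audio, one latent frame per 512 samples.
n_frames = latent_frames_for(2.5, sample_rate=44100, hop_length=512)
print(n_frames)  # 216
```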

Cross-attention mechanisms align text tokens with audio features during generation. This alignment happens automatically without requiring separate alignment models or forced alignment tools. The system learns these alignments during training, making the synthesis process more straightforward.
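The minimal Python sketch below implements scaled dot-product cross-attention, with audio frames as queries and text characters as keys and values. The vectors are hand-picked toy numbers rather than learned projections, and Supertonic's actual attention layers are not reproduced here. Reading off the most-attended character for each frame gives a soft alignment without any external aligner.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention.

    queries: one vector per audio latent frame (the sequence being generated).
    keys/values: one vector per text character (the conditioning sequence).
    Returns (outputs, weights); weights[i][j] is how much frame i attends to character j.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


text_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # 3 characters
text_values = [[1.0], [2.0], [3.0]]
frame_queries = [[4.0, 0.0], [4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]  # 4 audio frames
outputs, w = cross_attention(frame_queries, text_keys, text_values)
print([row.index(max(row)) for row in w])  # [0, 0, 1, 2]
```

In a trained model, these weights typically move monotonically through the text as generation proceeds. In this toy example, the first character spans two frames.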

Temporal compression reduces the length of latent sequences, making processing faster. The system uses ConvNeXt blocks for efficient feature extraction and transformation. These architectural choices contribute to the model's small size and fast inference speed.
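One common way to compress a latent sequence in time is to fold groups of consecutive frames into the channel dimension. The sequence gets shorter, but no values are discarded. The Python sketch below shows that reshaping. It is an assumption that Supertonic uses exactly this operation, and the factor of 4 is arbitrary.

```python
def compress_time(frames: list[list[float]], factor: int) -> list[list[float]]:
    """Stack every `factor` consecutive frames into one wider frame.

    A sequence of T frames with D channels becomes ceil(T / factor) frames with
    factor * D channels. The tail is zero-padded so T need not divide evenly.
    """
    dim = len(frames[0])
    remainder = len(frames) % factor
    if remainder:
        frames = frames + [[0.0] * dim for _ in range(factor - remainder)]
    return [
        [value for frame in frames[i:i + factor] for value in frame]
        for i in range(0, len(frames), factor)
    ]


latents = [[float(t), float(-t)] for t in range(10)]  # T=10 frames, D=2 channels
compressed = compress_time(latents, factor=4)
print(len(compressed), len(compressed[0]))  # 3 8
```

Shorter sequences mean fewer positions for the convolution and attention layers to process at every flow-matching step. That is why compression translates directly into faster inference.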

Language and Platform Support

Supertonic TTS provides inference examples and implementations across multiple programming languages and platforms. This broad support makes it accessible to developers working in different environments.

Python implementations use ONNX Runtime for cross-platform inference. Node.js support enables server-side JavaScript applications. Browser implementations use WebGPU and WebAssembly for client-side processing without server dependencies.

Native mobile support includes iOS applications and Swift implementations for macOS. Java implementations work across JVM-based platforms. C++ and Rust implementations provide high-performance options for systems programming. C# support enables .NET ecosystem integration, and Go implementations offer another server-side option.

Flutter SDK support allows cross-platform mobile and desktop applications. Each language implementation includes detailed documentation and example code to help developers get started quickly.

Use Cases and Applications

Supertonic TTS suits applications where speed, privacy, and offline capability are important. Accessibility tools can provide real-time text reading without network dependencies. Educational applications can generate speech for learning materials while keeping student data private.

Mobile applications benefit from on-device processing that works without internet connectivity. Embedded systems in smart devices can provide voice feedback without cloud services. Edge computing deployments can offer text-to-speech capabilities closer to end users, reducing latency.

Content creation tools can generate narration quickly for videos and presentations. Assistive technologies can provide immediate speech output for users who need text read aloud. Navigation systems can announce directions without relying on external services.

The system's ability to handle complex text expressions makes it suitable for applications that need to read financial data, technical documentation, or formatted content. The natural handling of numbers, dates, and abbreviations reduces the need for custom text preprocessing.

Advantages and Considerations

Advantages

- Extremely fast speech generation

- Complete privacy with local processing

- No internet connection required

- Small model size for easy deployment

- Natural handling of complex text

- Multiple platform and language support

- Configurable quality and speed trade-offs

- Zero latency from network requests

Considerations

- Requires initial model download

- Performance varies by hardware capabilities

- Model size still requires some storage space

- Quality may vary with inference step count

- GPU acceleration provides best performance

How to Use Supertonic TTS

Step 1: Install Dependencies

Begin by installing the necessary dependencies for your chosen platform. Python implementations require ONNX Runtime, while browser implementations need appropriate WebGPU support. Each language directory contains specific installation instructions.

Step 2: Download Models

Download the ONNX models and preset voices from the Hugging Face repository. Ensure Git LFS is installed to handle large model files. Place the downloaded assets in the appropriate directory for your implementation.

Step 3: Prepare Text Input

Prepare your text input. Supertonic TTS can handle raw text including numbers, dates, currency symbols, and abbreviations without pre-processing. You can input text directly as it appears in your source material.

Step 4: Configure Parameters

Adjust inference steps based on your quality and speed requirements. Fewer steps generate faster results, while more steps can improve audio quality. You can also configure batch processing if generating multiple samples.

Step 5: Generate Speech

Run the inference process to generate audio. The system will process your text and produce a 16-bit WAV file. Processing happens entirely on your device, so no network connection is needed during generation.
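If you wire the model's output into your own code, the last step is usually converting floating-point samples into 16-bit PCM. The Python sketch below does this with the standard-library `wave` module. The sine tone stands in for model output, and the 44.1 kHz sample rate is an assumption, so use the sample rate documented for your model.

```python
import math
import struct
import wave


def write_wav_16bit(path: str, samples: list[float], sample_rate: int) -> None:
    """Write mono float samples in [-1, 1] as a 16-bit PCM WAV file."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)  # avoid integer overflow
    pcm = b"".join(struct.pack("<h", int(round(s * 32767))) for s in clipped)
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        wav.writeframes(pcm)


# Stand-in for model output: 0.5 s of a 440 Hz tone at an assumed 44.1 kHz rate.
rate = 44100
tone = [0.3 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate // 2)]
write_wav_16bit("output.wav", tone, rate)
```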

Step 6: Use Generated Audio

The generated audio file can be used in your application, saved for later use, or further processed as needed. The output format is standard WAV, making it compatible with most audio processing tools and media players.